MYSQL主从搭建

一、试验环境

新搭建两台机器(从71上链接克隆过去)

MYSQL主:jx-mysql-master-36(10.10.10.36/24)

MySQL备:jx-mysql-slave-37(10.10.10.37/24)

二、搭建环境

安装数据库

yum install -y mariadb-server

systemctl restart mariadb 修改密码

[root@jx-mysql-master-36 ~]# mysqladmin -uroot password 123456主数据库开启binlog

[root@jx-mysql-master-36 ~]# vim /etc/my.cnf.d/mariadb-server.cnf

[mysqld]

log-bin=jx

server-id=36

binlog_do_db=jxcms修改完之后需要重启数据库

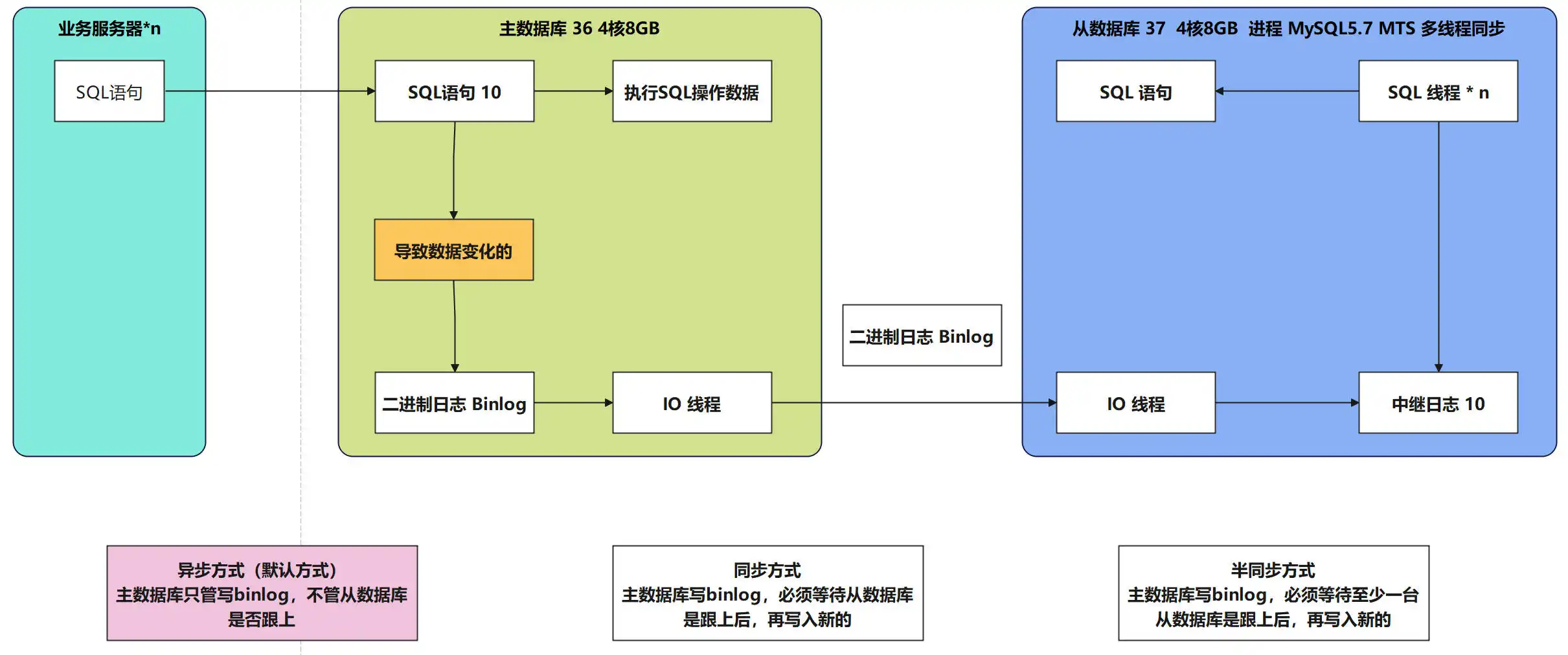

systemctl restart mariadb binlog工作过程:

开启binlog后无论做什么操作,binlog都会增加,Position会一直增加。

举例说明

进入数据库,查看binlog状态,重启binlog并记录下此时binlog状态。创建jx_cms数据库,查看binlog状态,删除jx_cms数据库再次查看binlog状态。

MariaDB [(none)]> show master status;

+-----------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+-----------+----------+--------------+------------------+

| jx.000002 | 321 | jxcms | |

+-----------+----------+--------------+------------------+

1 row in set (0.000 sec)

MariaDB [(none)]> reset master;

Query OK, 0 rows affected (0.018 sec)

MariaDB [(none)]> show master status;

+-----------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+-----------+----------+--------------+------------------+

| jx.000001 | 321 | jxcms | |

+-----------+----------+--------------+------------------+

1 row in set (0.000 sec)

MariaDB [(none)]> create database jxcms;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> show master status;

+-----------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+-----------+----------+--------------+------------------+

| jx.000001 | 452 | jxcms | |

+-----------+----------+--------------+------------------+

1 row in set (0.000 sec)

MariaDB [(none)]> drop database jxcms;

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> show master status;

+-----------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+-----------+----------+--------------+------------------+

| jx.000001 | 581 | jxcms | |

+-----------+----------+--------------+------------------+

1 row in set (0.001 sec)最后会发现开启binlog后无论做什么操作,binlog都会增加,Position会一直增加。

现在重置恢复初始状态。

MariaDB [(none)]> reset master;

Query OK, 0 rows affected (0.019 sec)

MariaDB [(none)]> show master status;

+-----------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+-----------+----------+--------------+------------------+

| jx.000001 | 321 | jxcms | |

+-----------+----------+--------------+------------------+

1 row in set (0.000 sec)binglog文件存储在`/var/lib/mysql/`目录内

[root@jx-mysql-master-36 ~]# cd /var/lib/mysql/

[root@jx-mysql-master-36 mysql]# ls -la

total 122928

drwxr-xr-x 4 mysql mysql 315 Dec 8 16:22 .

drwxr-xr-x 30 root root 4096 Dec 8 15:54 ..

-rw-rw---- 1 mysql mysql 16384 Dec 8 16:07 aria_log.00000001

-rw-rw---- 1 mysql mysql 52 Dec 8 16:07 aria_log_control

-rw-rw---- 1 mysql mysql 976 Dec 8 16:07 ib_buffer_pool

-rw-rw---- 1 mysql mysql 12582912 Dec 8 16:07 ibdata1

-rw-rw---- 1 mysql mysql 50331648 Dec 8 16:07 ib_logfile0

-rw-rw---- 1 mysql mysql 50331648 Dec 8 15:54 ib_logfile1

-rw-rw---- 1 mysql mysql 12582912 Dec 8 16:07 ibtmp1

-rw-rw---- 1 mysql mysql 321 Dec 8 16:22 jx.000001

-rw-rw---- 1 mysql mysql 12 Dec 8 16:22 jx.index

-rw-rw---- 1 mysql mysql 5 Dec 8 16:07 jx-mysql-master-36.pid

-rw-rw---- 1 mysql mysql 0 Dec 8 15:54 multi-master.info

drwx------ 2 mysql mysql 4096 Dec 8 15:54 mysql

srwxrwxrwx 1 mysql mysql 0 Dec 8 16:07 mysql.sock

-rw-rw---- 1 root root 15 Dec 8 16:07 mysql_upgrade_info

drwx------ 2 mysql mysql 20 Dec 8 16:07 performance_schema从服务器上更改配置,

vim /etc/my.cnf.d/mariadb-server.cnf

[mysqld]

server-id=37

重启MySQL

[root@jx-mysql-slave-37 ~]# systemctl restart mysql 主库MASTER节点上创建从数据库用于同步事件的用户:

grant replication slave on *.* to 'jxslave'@'%' identified by '123456';注意:上面SQL的作用是创建一个jxslave用户,密码为123456,并且给jxsalve用户授予replication salve权限。常用于建立复制时所需要用到的用户权限,也就是slave必须被master授权具有该权限的用户,才能通过该用户复制。

从库slave节点上告知从数据库同步参数

change master to master_host='10.10.10.36',master_user='jxslave',master_password='123456',master_log_file='jx.000001',master_log_pos=321;登录到从服务器上开启从服务器

MariaDB [(none)]> start slave;

Query OK, 0 rows affected (0.001 sec)

查看状态:

MariaDB [(none)]> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 10.10.10.36

Master_User: jxslave

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: jx.000001

Read_Master_Log_Pos: 321

Relay_Log_File: jx-mysql-slave-37-relay-bin.000002

Relay_Log_Pos: 548

Relay_Master_Log_File: jx.000001

Slave_IO_Running: Yes #主要看这2行

Slave_SQL_Running: Yes #主要看这2行

Replicate_Do_DB:

注意:如果slave是connecting状态那么,测试从服务器到主服务器的3306端口通不通。放开防火墙。放开之后从服务器上重启io进程(stop slave; start slave)。

下面做个测试:主创建数据库,查看从上有没有同步过来。

master:

MariaDB [(none)]> create database jxcms;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> show master status;

+-----------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+-----------+----------+--------------+------------------+

| jx.000001 | 452 | jxcms | |

+-----------+----------+--------------+------------------+

1 row in set (0.001 sec)slave:

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| jxcms |

| mysql |

| performance_schema |

+--------------------+

4 rows in set (0.001 sec)把之前的表导入主数据库

[root@jx-mysql-master-36 ~]# mysql -uroot -p123456 jxcms < /opt/jx1206/jx_class.sql 从数据库同步成功:

MariaDB [(none)]> use jxcms;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [jxcms]> show tables;

+-----------------+

| Tables_in_jxcms |

+-----------------+

| jx_class |

| jx_score |

| jx_stu |

| jx_subject |

| jx_test |

+-----------------+

5 rows in set (0.000 sec)

MariaDB [jxcms]> MYSQL主从同步原理

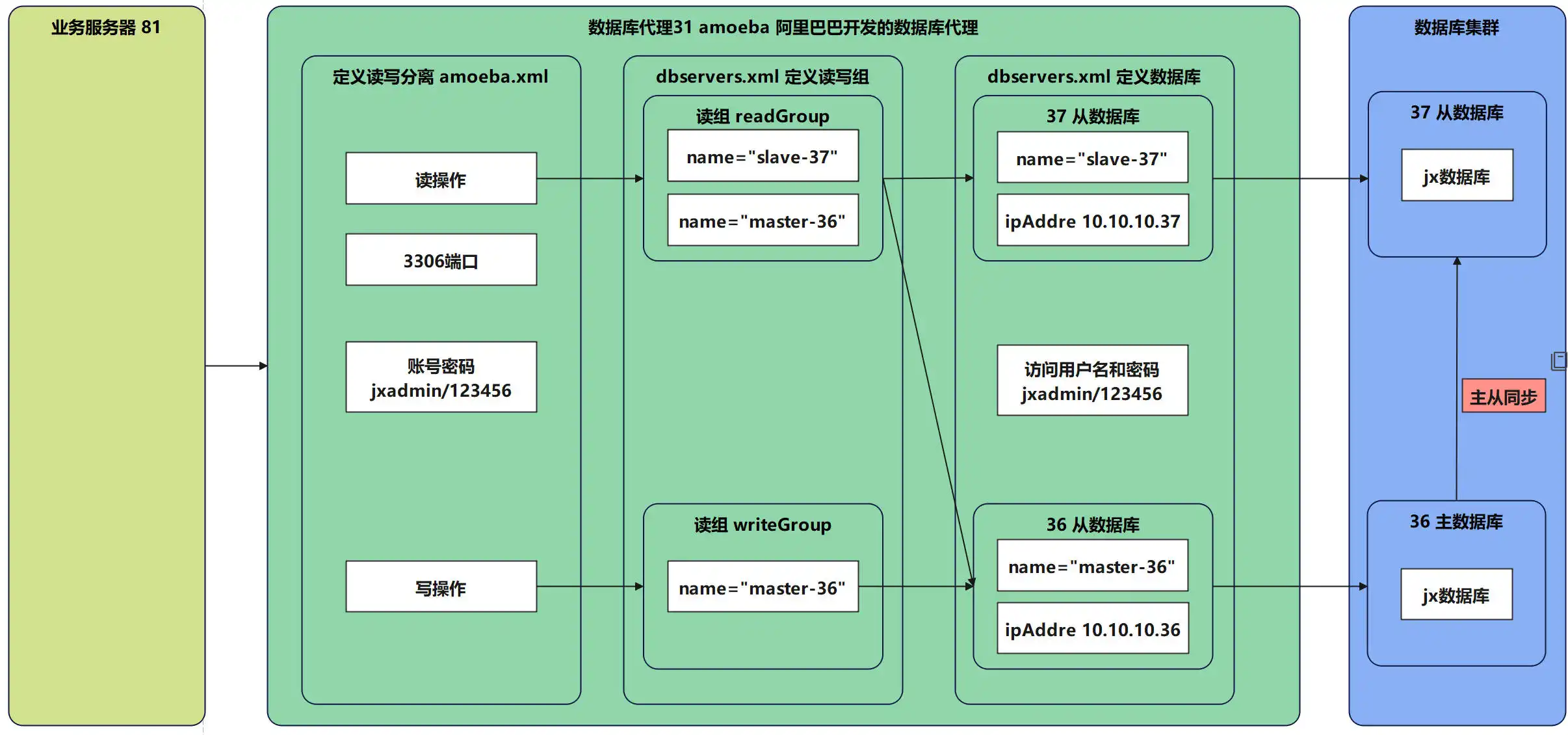

MYSQL读写分离

三、所需环境

使用上次试验的36、37主从

代理服务器(从71上克隆一台叫做jx-mysql-proxy-31,ip:10.10.10.31/24)

软件:amoeba

四、搭建过程

使用amoeba软件实现mysql读写分离:

总结:

写操作(Write) 是指对数据库数据的修改,包括插入、更新和删除。

读操作(Read) 是指从数据库中查询数据,不做修改。

在读写分离架构中,写操作发生在主数据库,读操作通常分配给从数据库。

把`amoeba-mysql-binary-2.1.0-RC5.tar.gz`通过网关服务器(254)上传到代理服务器(31)上,创建目录并解压到该目录。

# mkdir -p /opt/amoeba

# tar -xvf amoeba-mysql-binary-2.1.0-RC5.tar.gz -C /opt/amoebamaster(36)上创建一个数据库用户jxadmin,密码123456。现在数据库使用了主从模式,我们只需在主上创建用户,从上会自动同步。

# master 36上

grant all on *.* to "jxadmin"@"%" identified by "123456";

# slave 37 上查看是否有这个用户

MariaDB [(none)]> use mysql;

MariaDB [mysql]> select user,host from user;

+---------+-------------------+

| user | host |

+---------+-------------------+

| jxadmin | % |

| root | 127.0.0.1 |

| root | ::1 |

| root | jx-mysql-slave-37 |

| root | localhost |

+---------+-------------------+

5 rows in set (0.001 sec)读写分离的原理是:

用户从客户端访问进来,先到代理服务器(amoeba),如果是写动作,代理会将动作传递到master(36)上,master(36)写入后binlog中记录了这次写入,同步给slave。如果是读动作,代理会将动作随机分配到master(36)或者slave(37)上。这样相当于写都是由master处理,读是由master和slave共同承担,减轻了master负担。

开始配置代理(amoeba):

首先需要配置两个组(读组 readGroup、写组 writeGroup),master同时属于读组 readGroup和写组 writeGroup,而slave只属于读组 readGroup。

下面看代理软件配置:

conf/dbServers.xml

<factoryConfig class="com.meidusa.amoeba.mysql.net.MysqlServerConnectionFactory">

<property name="manager">${defaultManager}</property>

<property name="sendBufferSize">64</property>

<property name="receiveBufferSize">128</property>

#设置amoeba要连接mysql数据库的端口,默认3306

<!-- mysql port -->

<property name="port">3306</property>

#设置缺省的数据库

<!-- mysql schema -->

<property name="schema">jxcms</property>

#设置amoeba连接后端数据库服务器的账户密码

<!-- mysql user -->

<property name="user">jxadmin</property>

<!-- mysql password -->

<property name="password">123456</property>

</factoryConfig>

#设置后端可写的数据库

<dbServer name="master-36" parent="abstractServer">

<factoryConfig>

<!-- mysql ip -->

<property name="ipAddress">10.10.10.36</property>

</factoryConfig>

</dbServer>

#设置后端可读的数据库

<dbServer name="slave-37" parent="abstractServer">

<factoryConfig>

<!-- mysql ip -->

<property name="ipAddress">10.10.10.37</property>

</factoryConfig>

</dbServer>

#定义一个虚拟的dbserver,相当于一个dbserver组,将可读的数据库ip统一放到一个组内,可写的数据库ip统一放到一个组内。

<dbServer name="readGroup" virtual="true">

<poolConfig class="com.meidusa.amoeba.server.MultipleServerPool">

<!-- Load balancing strategy: 1=ROUNDROBIN , 2=WEIGHTBASED , 3=HA-->

<property name="loadbalance">1</property>

<!-- Separated by commas,such as: server1,server2,server1 -->

<property name="poolNames">master-36,slave-37</property>

</poolConfig>

</dbServer>

<dbServer name="writeGroup" virtual="true">

<poolConfig class="com.meidusa.amoeba.server.MultipleServerPool">

<!-- Load balancing strategy: 1=ROUNDROBIN , 2=WEIGHTBASED , 3=HA-->

<property name="loadbalance">1</property>

<!-- Separated by commas,such as: server1,server2,server1 -->

<property name="poolNames">master-36</property>

</poolConfig>

</dbServer>

conf/amoeba.xml

<?xml version="1.0" encoding="gbk"?>

<!DOCTYPE amoeba:configuration SYSTEM "amoeba.dtd">

<amoeba:configuration xmlns:amoeba="http://amoeba.meidusa.com/">

<proxy>

<!-- service class must implements com.meidusa.amoeba.service.Service -->

<service name="Amoeba for Mysql" class="com.meidusa.amoeba.net.ServerableConnectionManager">

<!-- port -->

#设置amoeba监听的端口,默认8066,#配置客户端连接amoeba时的账号,与后端服务器密码无关

<property name="port">3306</property>

<!-- bind ipAddress -->

<!-- <property name="ipAddress">10.10.10.31</property> -->

<property name="manager">${clientConnectioneManager}</property>

<property name="connectionFactory">

<bean class="com.meidusa.amoeba.mysql.net.MysqlClientConnectionFactory">

<property name="sendBufferSize">128</property>

<property name="receiveBufferSize">64</property>

</bean>

</property>

<property name="authenticator">

<bean class="com.meidusa.amoeba.mysql.server.MysqlClientAuthenticator">

<property name="user">jxadmin</property>

<property name="password">123456</property>

<property name="filter">

<bean class="com.meidusa.amoeba.server.IPAccessController">

<property name="ipFile">${amoeba.home}/conf/access_list.conf</property>

</bean>

</property>

</bean>

</property>

</service>

<!-- server class must implements com.meidusa.amoeba.service.Service -->

<service name="Amoeba Monitor Server" class="com.meidusa.amoeba.monitor.MonitorServer">

<!-- port -->

<!-- default value: random number

<property name="port">9066</property>

-->

<!-- bind ipAddress -->

<property name="ipAddress">0.0.0.0</property>

<property name="daemon">true</property>

<property name="manager">${clientConnectioneManager}</property>

<property name="connectionFactory">

<bean class="com.meidusa.amoeba.monitor.net.MonitorClientConnectionFactory"></bean>

</property>

</service>

<runtime class="com.meidusa.amoeba.mysql.context.MysqlRuntimeContext">

<!-- proxy server net IO Read thread size -->

<property name="readThreadPoolSize">20</property>

<!-- proxy server client process thread size -->

<property name="clientSideThreadPoolSize">30</property>

<!-- mysql server data packet process thread size -->

<property name="serverSideThreadPoolSize">30</property>

<!-- per connection cache prepared statement size -->

<property name="statementCacheSize">500</property>

<!-- query timeout( default: 60 second , TimeUnit:second) -->

<property name="queryTimeout">60</property>

</runtime>

</proxy>

<!--

Each ConnectionManager will start as thread

manager responsible for the Connection IO read , Death Detection

-->

<connectionManagerList>

<connectionManager name="clientConnectioneManager" class="com.meidusa.amoeba.net.MultiConnectionManagerWrapper">

<property name="subManagerClassName">com.meidusa.amoeba.net.ConnectionManager</property>

<!--

default value is avaliable Processors

<property name="processors">5</property>

-->

</connectionManager>

<connectionManager name="defaultManager" class="com.meidusa.amoeba.net.MultiConnectionManagerWrapper">

<property name="subManagerClassName">com.meidusa.amoeba.net.AuthingableConnectionManager</property>

<!--

default value is avaliable Processors

<property name="processors">5</property>

-->

</connectionManager>

</connectionManagerList>

<!-- default using file loader -->

<dbServerLoader class="com.meidusa.amoeba.context.DBServerConfigFileLoader">

<property name="configFile">${amoeba.home}/conf/dbServers.xml</property>

</dbServerLoader>

<queryRouter class="com.meidusa.amoeba.mysql.parser.MysqlQueryRouter">

<property name="ruleLoader">

<bean class="com.meidusa.amoeba.route.TableRuleFileLoader">

<property name="ruleFile">${amoeba.home}/conf/rule.xml</property>

<property name="functionFile">${amoeba.home}/conf/ruleFunctionMap.xml</property>

</bean>

</property>

<property name="sqlFunctionFile">${amoeba.home}/conf/functionMap.xml</property>

<property name="LRUMapSize">1500</property>

<!-- -->

#设置amoeba默认的池

<property name="defaultPool">writeGroup</property>

#取消注释,这里用来指定前面定义好的两个读写池

<property name="writePool">writeGroup</property>

<property name="readPool">readGroup</property>

<property name="needParse">true</property>

</queryRouter>

</amoeba:configuration>

bin/amoeba

DEFAULT_OPTS="-server -Xms256m -Xmx256m -Xss256k"xss参数需要大于128k才能启动。

启动amoeba。

[root@jx-mysql-proxy-31 ~]# /opt/amoeba/bin/amoeba start

log4j:WARN log4j config load completed from file:/opt/amoeba/conf/log4j.xml

2024-12-09 13:29:07,202 INFO context.MysqlRuntimeContext - Amoeba for Mysql current versoin=5.1.45-mysql-amoeba-proxy-2.1.0-RC5

log4j:WARN ip access config load completed from file:/opt/amoeba/conf/access_list.conf

2024-12-09 13:29:07,351 INFO net.ServerableConnectionManager - Amoeba for Mysql listening on 0.0.0.0/0.0.0.0:3306.

2024-12-09 13:29:07,354 INFO net.ServerableConnectionManager - Amoeba Monitor Server listening on /0.0.0.0:25667.

下面开始测试。开启运维服务器(81)安装mysql客户端向代理(31)请求数据。

由于我们代理软件默认设置的算法是轮询(robin),所以查询请求通过代理软件会轮流发送给master(36)和slave(37)。这个算法可以选择1=ROUNDROBIN , 2=WEIGHTBASED , 3=HA。在conf/dbServer.conf中配置。

<dbServer name="readGroup" virtual="true">

<poolConfig class="com.meidusa.amoeba.server.MultipleServerPool">

<!-- Load balancing strategy: 1=ROUNDROBIN , 2=WEIGHTBASED , 3=HA-->

<property name="loadbalance">1</property>

<!-- Separated by commas,such as: server1,server2,server1 -->

<property name="poolNames">master-36,slave-37</property>

</poolConfig>

</dbServer>

<dbServer name="writeGroup" virtual="true">

<poolConfig class="com.meidusa.amoeba.server.MultipleServerPool">

<!-- Load balancing strategy: 1=ROUNDROBIN , 2=WEIGHTBASED , 3=HA-->

<property name="loadbalance">1</property>

<!-- Separated by commas,such as: server1,server2,server1 -->

<property name="poolNames">master-36</property>

</poolConfig>

</dbServer>运维服务器连接代理软件。注意此时代理软件监听端口是3306

[root@jx-ops-81 ~]# mysql -h10.10.10.31 -ujxadmin -p123456使用查询功能,同时在代理软件(31)上开启抓包,抓3306端口。多次查询观察数据包走向。

MySQL [(none)]> select * from jx_stu;

+----+---------+--------+-----+

| id | classId | name | age |

+----+---------+--------+-----+

| 1 | 1 | 张三 | 18 |

| 2 | 1 | 李四 | 19 |

| 3 | 2 | 王五 | 20 |

| 4 | 2 | 马六 | 21 |

+----+---------+--------+-----+

4 rows in set (0.009 sec)# 第一次查询

[root@jx-mysql-proxy-31 ~]# tcpdump -i enp0s3 -p port 3306 -vv -nn

dropped privs to tcpdump

tcpdump: listening on enp0s3, link-type EN10MB (Ethernet), capture size 262144 bytes

13:43:01.483145 IP (tos 0x8, ttl 64, id 15783, offset 0, flags [DF], proto TCP (6), length 77)

10.10.10.81.38478 > 10.10.10.31.3306: Flags [P.], cksum 0x7f30 (correct), seq 2088286888:2088286913, ack 2006920818, win 501, options [nop,nop,TS val 3974975417 ecr 1230302698], length 25

13:43:01.484639 IP (tos 0x0, ttl 64, id 34595, offset 0, flags [DF], proto TCP (6), length 77)

10.10.10.31.41820 > 10.10.10.37.3306: Flags [P.], cksum 0x2897 (incorrect -> 0xfde7), seq 3778517649:3778517674, ack 4284329884, win 502, options [nop,nop,TS val 3190076538 ecr 1151198077], length 25

13:43:01.485688 IP (tos 0x8, ttl 64, id 19710, offset 0, flags [DF], proto TCP (6), length 351)

10.10.10.37.3306 > 10.10.10.31.41820: Flags [P.], cksum 0xe235 (correct), seq 1:300, ack 25, win 510, options [nop,nop,TS val 1151432309 ecr 3190076538], length 299

13:43:01.485737 IP (tos 0x0, ttl 64, id 34596, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.31.41820 > 10.10.10.37.3306: Flags [.], cksum 0x287e (incorrect -> 0x426a), seq 25, ack 300, win 501, options [nop,nop,TS val 3190076540 ecr 1151432309], length 0

13:43:01.488493 IP (tos 0x0, ttl 64, id 17617, offset 0, flags [DF], proto TCP (6), length 351)

10.10.10.31.3306 > 10.10.10.81.38478: Flags [P.], cksum 0x29d5 (incorrect -> 0xb245), seq 1:300, ack 25, win 510, options [nop,nop,TS val 1230713367 ecr 3974975417], length 299

13:43:01.489187 IP (tos 0x8, ttl 64, id 15784, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.81.38478 > 10.10.10.31.3306: Flags [.], cksum 0x1276 (correct), seq 25, ack 300, win 501, options [nop,nop,TS val 3974975423 ecr 1230713367], length 0

13:43:03.501750 IP (tos 0x8, ttl 64, id 15785, offset 0, flags [DF], proto TCP (6), length 77)

# 第二次查询

10.10.10.81.38478 > 10.10.10.31.3306: Flags [P.], cksum 0x31d6 (correct), seq 25:50, ack 300, win 501, options [nop,nop,TS val 3974977436 ecr 1230713367], length 25

13:43:03.503360 IP (tos 0x0, ttl 64, id 20279, offset 0, flags [DF], proto TCP (6), length 77)

10.10.10.31.43088 > 10.10.10.36.3306: Flags [P.], cksum 0x2896 (incorrect -> 0x46de), seq 951384415:951384440, ack 1423004894, win 502, options [nop,nop,TS val 1338891573 ecr 42560581], length 25

13:43:03.504750 IP (tos 0x8, ttl 64, id 6648, offset 0, flags [DF], proto TCP (6), length 351)

10.10.10.36.3306 > 10.10.10.31.43088: Flags [P.], cksum 0x2366 (correct), seq 1:300, ack 25, win 510, options [nop,nop,TS val 42796803 ecr 1338891573], length 299

13:43:03.504761 IP (tos 0x0, ttl 64, id 20280, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.31.43088 > 10.10.10.36.3306: Flags [.], cksum 0x287d (incorrect -> 0x839a), seq 25, ack 300, win 501, options [nop,nop,TS val 1338891575 ecr 42796803], length 0

13:43:03.506561 IP (tos 0x0, ttl 64, id 17618, offset 0, flags [DF], proto TCP (6), length 351)

10.10.10.31.3306 > 10.10.10.81.38478: Flags [P.], cksum 0x29d5 (incorrect -> 0xa13c), seq 300:599, ack 50, win 510, options [nop,nop,TS val 1230715385 ecr 3974977436], length 299

13:43:03.506948 IP (tos 0x8, ttl 64, id 15786, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.81.38478 > 10.10.10.31.3306: Flags [.], cksum 0x016e (correct), seq 50, ack 599, win 501, options [nop,nop,TS val 3974977441 ecr 1230715385], length 0测试写入,多次插入数据,观察数据包走向。

insert into jx_stu values(NULL, 1, "赵六", 22);第一次插入数据:

13:50:06.166385 IP (tos 0x8, ttl 64, id 50446, offset 0, flags [DF], proto TCP (6), length 105)

10.10.10.81.42170 > 10.10.10.31.3306: Flags [P.], cksum 0x5ede (correct), seq 2426399156:2426399209, ack 2620453011, win 501, options [nop,nop,TS val 3975400102 ecr 1231029962], length 53

13:50:06.167965 IP (tos 0x0, ttl 64, id 31543, offset 0, flags [DF], proto TCP (6), length 105)

10.10.10.31.46312 > 10.10.10.36.3306: Flags [P.], cksum 0x28b2 (incorrect -> 0xfe53), seq 1397525989:1397526042, ack 1295252074, win 502, options [nop,nop,TS val 1339314238 ecr 43160561], length 53

13:50:06.206841 IP (tos 0x0, ttl 64, id 58918, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.31.3306 > 10.10.10.81.42170: Flags [.], cksum 0x28aa (incorrect -> 0xe526), seq 1, ack 53, win 510, options [nop,nop,TS val 1231138086 ecr 3975400102], length 0

13:50:06.210011 IP (tos 0x8, ttl 64, id 47026, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.36.3306 > 10.10.10.31.46312: Flags [.], cksum 0x44b6 (correct), seq 1, ack 53, win 510, options [nop,nop,TS val 43219509 ecr 1339314238], length 0

13:50:06.313916 IP (tos 0x8, ttl 64, id 47027, offset 0, flags [DF], proto TCP (6), length 63)

10.10.10.36.3306 > 10.10.10.31.46312: Flags [P.], cksum 0x3837 (correct), seq 1:12, ack 53, win 510, options [nop,nop,TS val 43219613 ecr 1339314238], length 11

13:50:06.313938 IP (tos 0x0, ttl 64, id 31544, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.31.46312 > 10.10.10.36.3306: Flags [.], cksum 0x287d (incorrect -> 0x43b9), seq 53, ack 12, win 502, options [nop,nop,TS val 1339314384 ecr 43219613], length 0

13:50:06.316125 IP (tos 0x0, ttl 64, id 58919, offset 0, flags [DF], proto TCP (6), length 63)

10.10.10.31.3306 > 10.10.10.81.42170: Flags [P.], cksum 0x28b5 (incorrect -> 0xd8a2), seq 1:12, ack 53, win 510, options [nop,nop,TS val 1231138195 ecr 3975400102], length 11

13:50:06.316821 IP (tos 0x8, ttl 64, id 50447, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.81.42170 > 10.10.10.31.3306: Flags [.], cksum 0xe420 (correct), seq 53, ack 12, win 501, options [nop,nop,TS val 3975400253 ecr 1231138195], length 0第二次插入数据

13:50:22.318452 IP (tos 0x8, ttl 64, id 50448, offset 0, flags [DF], proto TCP (6), length 105)

10.10.10.81.42170 > 10.10.10.31.3306: Flags [P.], cksum 0x78bb (correct), seq 53:106, ack 12, win 501, options [nop,nop,TS val 3975416254 ecr 1231138195], length 53

13:50:22.318533 IP (tos 0x0, ttl 64, id 58920, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.31.3306 > 10.10.10.81.42170: Flags [.], cksum 0x28aa (incorrect -> 0x66df), seq 12, ack 106, win 510, options [nop,nop,TS val 1231154197 ecr 3975416254], length 0

13:50:22.320014 IP (tos 0x0, ttl 64, id 31545, offset 0, flags [DF], proto TCP (6), length 105)

10.10.10.31.46312 > 10.10.10.36.3306: Flags [P.], cksum 0x28b2 (incorrect -> 0xd84e), seq 53:106, ack 12, win 502, options [nop,nop,TS val 1339330390 ecr 43219613], length 53

13:50:22.320543 IP (tos 0x8, ttl 64, id 47028, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.36.3306 > 10.10.10.31.46312: Flags [.], cksum 0xc66e (correct), seq 12, ack 106, win 510, options [nop,nop,TS val 43235620 ecr 1339330390], length 0

13:50:22.327966 IP (tos 0x8, ttl 64, id 47029, offset 0, flags [DF], proto TCP (6), length 63)

10.10.10.36.3306 > 10.10.10.31.46312: Flags [P.], cksum 0xb950 (correct), seq 12:23, ack 106, win 510, options [nop,nop,TS val 43235627 ecr 1339330390], length 11

13:50:22.328025 IP (tos 0x0, ttl 64, id 31546, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.31.46312 > 10.10.10.36.3306: Flags [.], cksum 0x287d (incorrect -> 0xc65c), seq 106, ack 23, win 502, options [nop,nop,TS val 1339330398 ecr 43235627], length 0

13:50:22.330141 IP (tos 0x0, ttl 64, id 58921, offset 0, flags [DF], proto TCP (6), length 63)

10.10.10.31.3306 > 10.10.10.81.42170: Flags [P.], cksum 0x28b5 (incorrect -> 0x59bc), seq 12:23, ack 106, win 510, options [nop,nop,TS val 1231154209 ecr 3975416254], length 11

13:50:22.330686 IP (tos 0x8, ttl 64, id 50449, offset 0, flags [DF], proto TCP (6), length 52)

10.10.10.81.42170 > 10.10.10.31.3306: Flags [.], cksum 0x66c4 (correct), seq 106, ack 23, win 501, options [nop,nop,TS val 3975416267 ecr 1231154209], length 0为了验证读写分离是否生效,我们将master上mysql服务停止,然后从运维服务器(81)上连接代理(31)进行写操作。

#停止master mysql

systemctl stop mysql

# 写操作:

MySQL [(none)]> insert into jx_stu values(NULL, 1, "赵七", 22);

ERROR 10000 (null ): session was killed!!

# 读操作,第一次读取失败(master节点服务关闭),第二次读取成功,此时已切换到slave节点上。

MySQL [(none)]> select * from jx_stu;

ERROR 1044 (42000): poolName=writeGroup, no valid pools

MySQL [(none)]> select * from jx_stu;

ERROR 2006 (HY000): MySQL server has gone away

No connection. Trying to reconnect...

Connection id: 614793363

Current database: *** NONE ***

+----+---------+--------+-----+

| id | classId | name | age |

+----+---------+--------+-----+

| 1 | 1 | 张三 | 18 |

| 2 | 1 | 李四 | 19 |

| 3 | 2 | 王五 | 20 |

| 4 | 2 | 马六 | 21 |

| 5 | 1 | 赵六 | 22 |

| 6 | 1 | 赵六 | 22 |

| 7 | 1 | 赵六 | 22 |

| 8 | 1 | 赵六 | 22 |

+----+---------+--------+-----+

8 rows in set (0.014 sec)此时代理软件报错信息如下:

[root@jx-mysql-proxy-31 ~]# /opt/amoeba/bin/amoeba start

log4j:WARN log4j config load completed from file:/opt/amoeba/conf/log4j.xml

2024-12-09 13:29:07,202 INFO context.MysqlRuntimeContext - Amoeba for Mysql current versoin=5.1.45-mysql-amoeba-proxy-2.1.0-RC5

log4j:WARN ip access config load completed from file:/opt/amoeba/conf/access_list.conf

2024-12-09 13:29:07,351 INFO net.ServerableConnectionManager - Amoeba for Mysql listening on 0.0.0.0/0.0.0.0:3306.

2024-12-09 13:29:07,354 INFO net.ServerableConnectionManager - Amoeba Monitor Server listening on /0.0.0.0:25667.

java.lang.Exception: poolName=writeGroup, no valid pools

at com.meidusa.amoeba.net.poolable.MultipleLoadBalanceObjectPool.borrowObject(MultipleLoadBalanceObjectPool.java:183)

at com.meidusa.amoeba.mysql.handler.CommandMessageHandler.startSession(CommandMessageHandler.java:629)

at com.meidusa.amoeba.mysql.handler.MySqlCommandDispatcher.handleMessage(MySqlCommandDispatcher.java:123)

at com.meidusa.amoeba.mysql.net.MysqlClientConnection$2.run(MysqlClientConnection.java:291)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

java.lang.Exception: poolName=writeGroup, no valid pools

at com.meidusa.amoeba.net.poolable.MultipleLoadBalanceObjectPool.borrowObject(MultipleLoadBalanceObjectPool.java:183)

at com.meidusa.amoeba.mysql.handler.CommandMessageHandler.startSession(CommandMessageHandler.java:629)

at com.meidusa.amoeba.mysql.handler.MySqlCommandDispatcher.handleMessage(MySqlCommandDispatcher.java:123)

at com.meidusa.amoeba.mysql.net.MysqlClientConnection$2.run(MysqlClientConnection.java:291)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

评论区